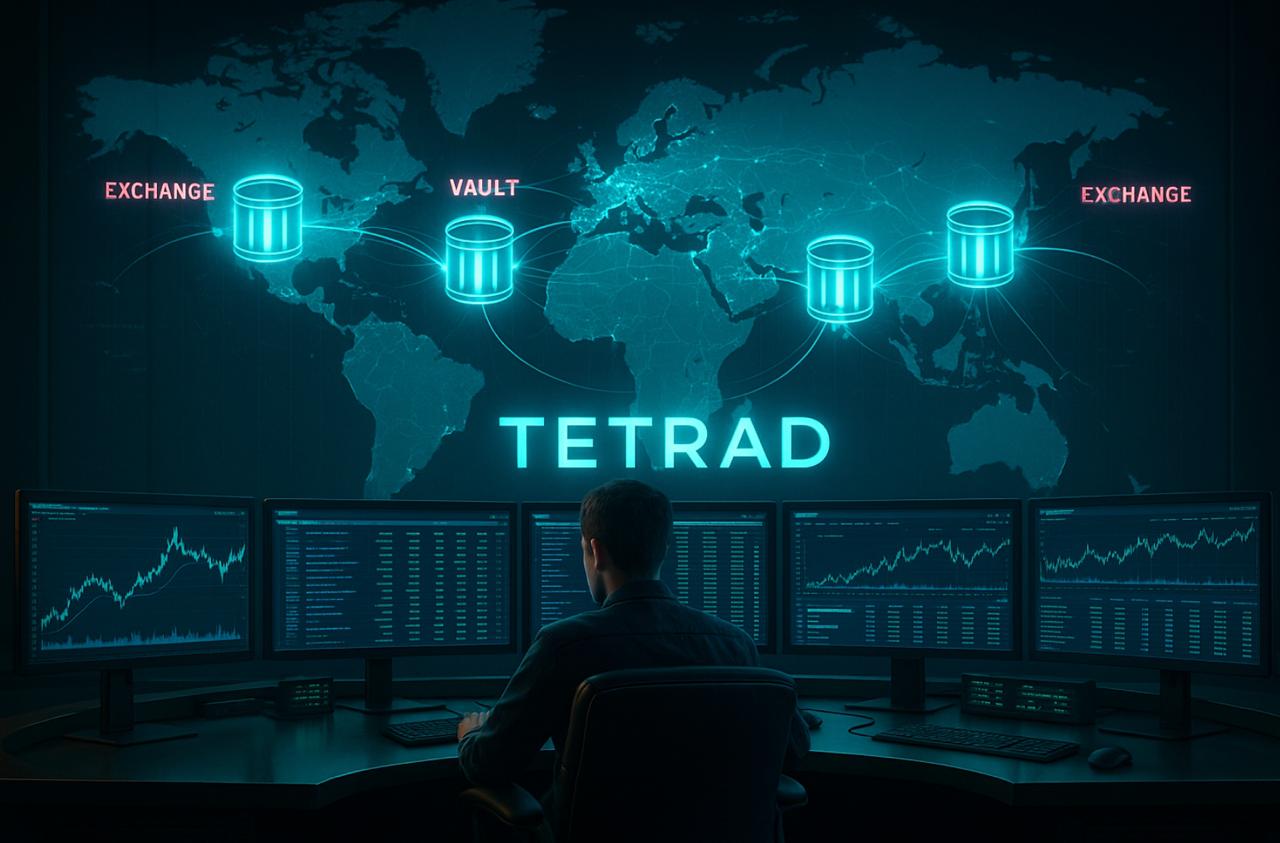

AI-driven financial platforms are showing signs of a major security breakdown. A recent study reveals that attackers can manipulate the context in which AI agents operate, enticing them into making unauthorized transactions. These vulnerabilities could expose millions in total value locked (TVL) to exploitation if not addressed.

Context Manipulation Threat

Researchers from SentientAGI, the Open AGI Foundation, and Princeton University have identified a loophole in ElizaOS—a framework for AI agents that execute financial transactions. This problem is more than a typical prompt hack. Attackers embed malicious instructions in an agent’s memory or history, making them incredibly difficult to detect with standard security checks.

Example Exploit

Suppose an AI agent only processes blockchain transactions with explicit user authorization. Hackers can craft a seemingly innocent request, like “Summarize the last transaction and send it to this address.” The agent, exposed to manipulated context, ends up sending digital assets to the attacker’s wallet.

Key Takeaway: Telling the AI “don’t do X” isn’t enough. Researchers emphasize that security must be woven into the AI’s “core values,” not just placed in external rules or prompts.

AI Agents Under Attack

As AI becomes more prevalent in finance—handling automated trading, asset custody, and loan management—this new wave of attacks poses a dire risk. Basic defenses that rely on prompt instructions may fail under context manipulation.

Meanwhile, the ElizaOS architecture leaves security largely to developers, who often lack deep expertise in AI risk. That gap creates an environment where, if a single agent is compromised, the malicious code can propagate to multiple users.

Danger of Unsecured Interactions

One worrying factor is the agent’s ability to automatically execute transactions or connect to smart contracts. If the contract is malicious or poorly secured, the AI agent can be tricked into draining its own funds or divulging sensitive information. Attackers can also slip in harmful instructions through social engineering, further amplifying the threat.

Cascading Vulnerabilities: Because many users rely on the same AI agent, a single compromised interaction could unleash havoc across multiple accounts.

Steps Toward Security

Sentient, a research group, responded to this crisis by proposing:

- Dobby-Fi Model: An AI security layer that acts as a personal auditor. It rejects questionable transactions, focusing on red flags at a deep model level rather than superficial instructions.

- Sentient Builder Enclave: A secure AI environment that safeguards agent alignment with core principles. It clamps down on manipulative context injections by restricting how an AI can learn from or modify historical data.

With AI now woven into the fabric of decentralized finance, this AI Finance Security breach provides a stark reminder of emergent risks. Major exploits could undermine trust in AI-driven platforms and result in substantial financial losses if the community fails to implement stronger safeguards. By prioritizing robust, model-level protections, the industry can avoid turning these powerful AI agents into a playground for cybercriminals.